...

Write an SQL statement that will be used to retrieve data from the database. The SELECT part of the SQL statement determines the columns you will have to map in the next step.

Source column mapping

Once you have finished setting your parameters you will have to conduct the Source column mapping.

Mapping the data to columns is similar to importing excel and CSV files. The differences here are that you need to name your own cube and dimensions, because they need to be created if your data does not fit any of the already existing cubes.

When you need to import several columns as the same dimension attributes then click Show options and specify the following options:

- ID column - if attribute is unique integer ID attribute of dimension member (like issue

idattribute in this example). If ID column is not specified then dimension members will be identified by key column and IDs will be automatically generated for each new key column value. - Key column - if attribute is unique numeric or string key of dimension member (like issue

numberhere) - Name column - if attribute is longer name that should be displayed as dimension member name (if not specified then key column will be used as name column). But keep in mind that name column still needs to be unique within dimension (therefore in this case we will not use

titleas name as it might not be unique). - Ordinal column - if this attribute should be used to order dimension members.

- Property name - specify if attribute should be imported as additional property for dimension member which is identified by ID or key column (like issue

titlein this example). - When importing date or datetime field as dimension property you can specify additional options:

- Date count measure - specify measure name that should store count of imported rows in this date (in this example

Issues createdmeasure will show the count of issues that were created increated_atdate orIssues closedwill show the count of issues that were closed inclosed_atdate). - Date count dimension - specify time dimension which should be used for this measure (by default

Timedimension name is suggested).

- Date count measure - specify measure name that should store count of imported rows in this date (in this example

...

Incremental import

| Note |

|---|

Available from the flex.bi version 4.2 and in flex.bi Cloud. |

By default, SQL import will always re-import all data. During the import the old imported data will be deleted and then replaced by new returned data. If SQL SELECT returns many rows then each data import might take long time as well as reports might return incomplete data while the old data are deleted and not all new data are imported.

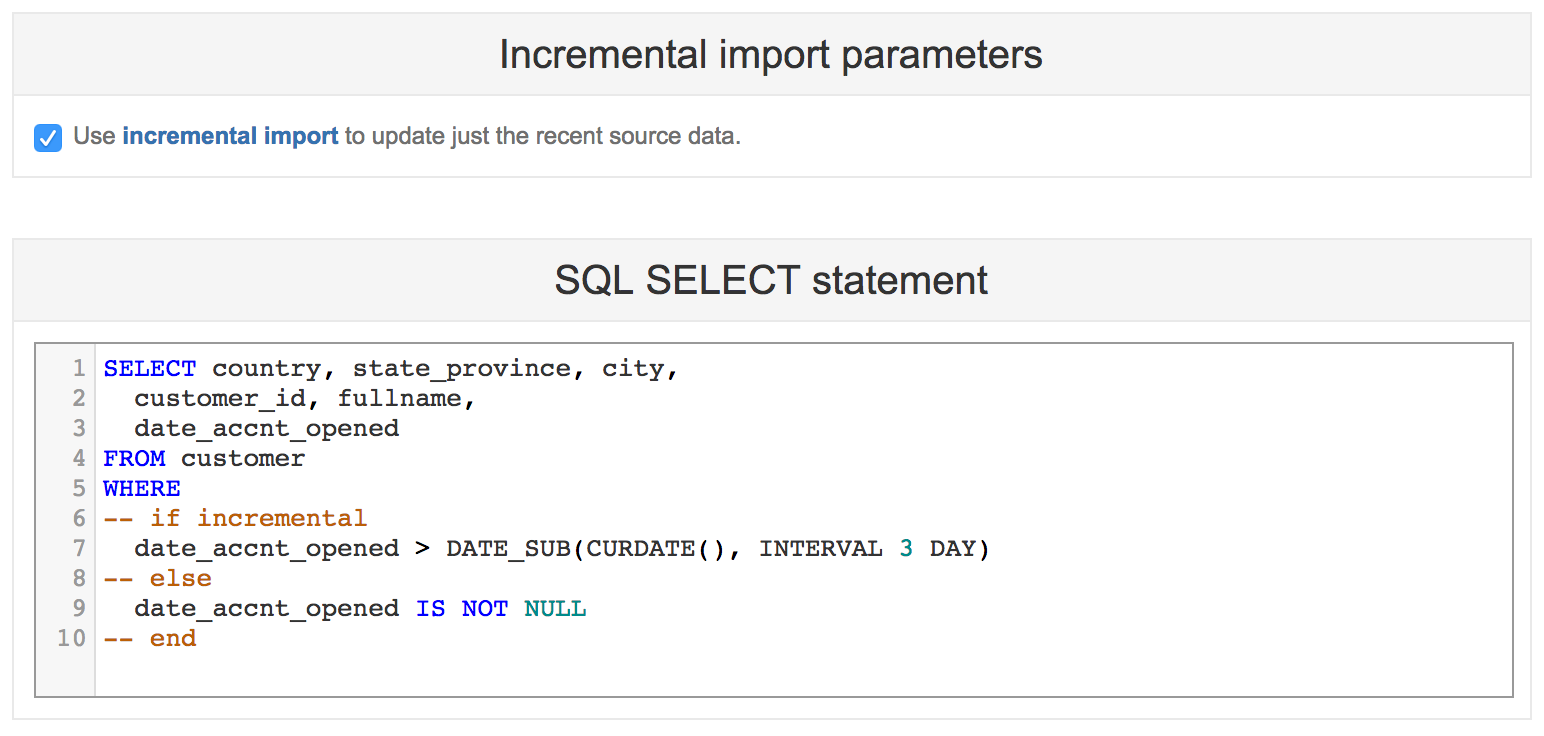

If you would like to use incremental import to update just the recent source data after the initial full import, then click Incremental import parameters and enable it:

In addition, specify the incremental import WHERE conditions in the SELECT statement. Use special -- if incremental, -- else, -- end comments (as shown in the example) to specify which lines should be included only for the incremental import and which lines only for the full import. Typically you need to specify a condition that filters just recently updated rows using the corresponding date column.

If incremental import is used then it will be required to specify a Source ID column in the source columns mapping step. Source ID column value should provide a unique results row identifier. It is used to identify when some existing imported rows in flex.bi should be replaced with updated source data during the incremental import.

If you have previously imported all data without the incremental import option, then it will not be possible to modify the source columns mapping. Therefore, at first delete all imported data for this SQL source, and then modify the source columns mapping and specify the Source ID column.

Source column mapping

To conduct the Source column mapping please visit Data mapping.

When you have finished mapping your data, press 'Start Import' button.

If your import finishes successfully, you can see the results in the Analyze tab, in the cube you imported your data to.

...